Download Gpu Shader 30 For Pes 2013

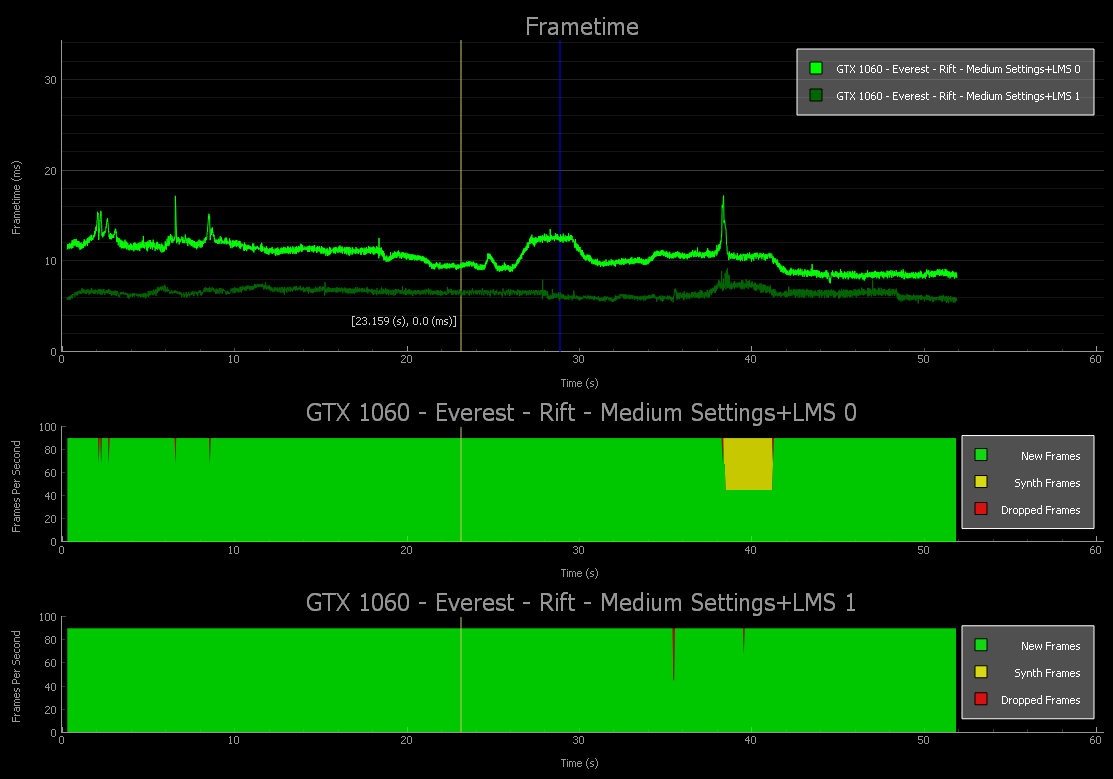

By Andrew Burnes on Wed, Mar 15 2017

In 2013 NVIDIA revolutionized graphics card benchmarking with the release of FCAT, a free tool that enabled gamers and reviewers to test more than just FPS. With FCAT, performance could for the first time be measured in intricate detail, revealing micro-stutters, dropped frames, incorrect multi-GPU framepacing, and much more. Now, the best GPUs are those that are fast and smooth, giving gamers a far more enjoyable experience in every game.

Today we’re releasing FCAT VR, enabling reviewers, game developers, hardware manufacturers, and enthusiasts to reliably test the performance of Virtual Reality PC games, where fast, smooth performance prevents stuttery, unresponsive gameplay, which can lead to eye strain and discomfort. Before now, Virtual Reality testing relied on general benchmarking tools, synthetic tests, and hacked-together solutions, which failed to reveal the true performance of GPUs in VR games.

With FCAT VR, we read performance data from NVIDIA driver stats, Event Tracing for Windows (ETW) events for Oculus Rift, and SteamVR’s performance API data for HTC Vive to generate precise VR performance data on all GPUs. Using this data, FCAT VR users can create charts and analyze data for frametimes, dropped frames, runtime warp dropped frames, and Asynchronous Space Warp (ASW) synthesized frames, revealing stutters, interpolation, and the experience received when gaming on any GPU in the tested Virtual Reality game.

Pro Evolution Soccer 2012 requires a Radeon X800 XT Platinum graphics card with a Core 2 Duo processor, and you will need a DirectX 9 GPU. Even a 13-year-old PC should be able to play it smoothly. Graphics requirements: 128 MB of video RAM, Pixel Shader 3.0, and a DirectX 9.0c compatible video card. Pro Evolution Soccer 2013 (PES 2013) is a soccer game in the Pro Evolution series.

An example of FCAT VR’s performance charts

If you wish to benchmark a system with FCAT VR, the testing itself is straightforward, though setting up analysis of benchmark data does require a few steps. How-To Guides for all steps, along with additional information that will give you greater insight into FCAT VR testing, can be accessed below.

FCAT VR Testing, In Depth

Today’s leading high-end VR headsets, the Oculus Rift and HTC Vive, both refresh their screen at a fixed interval of 90 Hz, which equates to one screen refresh every ~11.1 milliseconds (ms).
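The ~11.1 ms figure follows directly from the 90 Hz refresh rate. As a minimal sketch (the function name `refresh_interval_ms` is ours for illustration and is not part of FCAT VR), the conversion is:

```python
def refresh_interval_ms(refresh_hz: float) -> float:
    """Return the time between two screen refreshes, in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


# Rift and Vive both refresh at 90 Hz.
interval = refresh_interval_ms(90.0)
print(f"{interval:.1f} ms")  # 11.1 ms
```

Every frame has to be rendered and processed inside this budget to be shown at the next refresh.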

VSYNC is enabled to prevent tearing, since tearing in the HMD can cause major discomfort to the user. VR software for delivering frames can be divided into two parts: the VR Game and the VR Runtime. When timing requirements are satisfied and the process works correctly, the following sequence is observed:

• The VR Game samples the current headset position sensor and updates the camera position in the game to correctly track a user’s head position.
• The game then establishes a graphics frame, and the GPU renders the new frame to a texture (not the final display).
• The VR Runtime reads the new texture, modifies it, and generates a final image that is displayed on the headset display.
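The ordering of these three steps can be illustrated with a toy sketch. Every name here (`HeadPose`, `Texture`, `sample_head_pose`, `render_to_texture`, `runtime_present`, `run_frame`) is hypothetical and stands in for work done by a real game engine, GPU, and VR runtime. Nothing below is an actual Oculus or SteamVR API.

```python
from dataclasses import dataclass


@dataclass
class HeadPose:
    yaw_deg: float
    pitch_deg: float


@dataclass
class Texture:
    pose: HeadPose  # head pose the frame was rendered for
    label: str


def sample_head_pose(sensor_reading):
    """Step 1: the VR Game samples the headset position sensor."""
    yaw, pitch = sensor_reading
    return HeadPose(yaw, pitch)


def render_to_texture(pose, frame_index):
    """Step 2: the GPU renders the new frame to a texture, not the display."""
    return Texture(pose=pose, label=f"frame {frame_index}")


def runtime_present(texture):
    """Step 3: the VR Runtime reads the texture, modifies it, and outputs the final image."""
    return (f"{texture.label} (runtime-processed) at "
            f"yaw={texture.pose.yaw_deg}, pitch={texture.pose.pitch_deg}")


def run_frame(sensor_reading, frame_index):
    pose = sample_head_pose(sensor_reading)
    texture = render_to_texture(pose, frame_index)
    return runtime_present(texture)


print(run_frame((15.0, -2.5), 0))
```

The point of the sketch is only the data flow: the pose is sampled first, the game's output is an intermediate texture, and the runtime has the last word on what reaches the headset.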

Two of these interesting modifications include color correction and lens correction, but the work done by the VR Runtime can be much more elaborate. The following figure shows what this looks like in a timing chart.

Ideal VR Pipeline

The job of the Runtime becomes significantly more complex if the time to generate a frame exceeds the refresh interval. In that case, the total elapsed time for the combined VR Game and VR Runtime is too long, and the frame will not be ready to display at the beginning of the next scan. In this case, the HMD would typically redisplay the prior rendered frame from the Runtime, but for VR that experience is unacceptable because repeating an old frame on a VR headset display ignores head motion and results in a poor user experience.
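The timing condition above can be written as a small check. This is a simplified sketch that assumes the VR Game and VR Runtime run back to back within a single refresh interval; real pipelines can overlap work across frames. The function names are ours, not part of any runtime.

```python
import math

REFRESH_HZ = 90.0
REFRESH_INTERVAL_MS = 1000.0 / REFRESH_HZ  # ~11.1 ms


def meets_deadline(game_ms, runtime_ms, interval_ms=REFRESH_INTERVAL_MS):
    """True if game plus runtime work fits inside one refresh interval."""
    return game_ms + runtime_ms <= interval_ms


def intervals_needed(game_ms, runtime_ms, interval_ms=REFRESH_INTERVAL_MS):
    """Number of refresh intervals that pass before the frame is ready."""
    return max(1, math.ceil((game_ms + runtime_ms) / interval_ms))


print(meets_deadline(8.0, 2.0))     # True: 10.0 ms fits in ~11.1 ms
print(meets_deadline(12.0, 2.0))    # False: 14.0 ms is too long
print(intervals_needed(12.0, 2.0))  # 2: the frame misses one scan
```

In the second case the frame is not ready at the start of the next scan, which is the situation the Runtime has to handle.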

Runtimes use a variety of techniques to improve this situation, including algorithms that synthesize a new frame rather than repeat the old one. Most of the techniques center on the idea of reprojection, which uses the most recent head sensor location input to adjust the old frame to match the current head position. This does not improve the animation embedded in a frame, which will suffer from a lower frame rate and judder, but a more fluid visual experience that tracks better with head motion is presented in the HMD.

FCAT VR Capture captures four key performance metrics for Rift and Vive:

• Dropped Frames (also known as App Miss or App Drop)
• Warp Misses
• Frametime data
• Asynchronous Space Warp (ASW) synthesized frames

Dropped Frames

Whenever the frame rendered by the VR Game arrives too late to be displayed in the current refresh interval, a Frame Drop occurs and causes the game to stutter. Understanding these drops and measuring them provides insight into VR performance.
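To make the idea of a dropped frame concrete, here is a rough sketch that estimates drops from a list of frametimes. It assumes each frame starts at a refresh boundary and counts every additional refresh interval a frame occupies as one drop. FCAT VR itself reads drop data from the runtime and driver sources described earlier, so this is an illustration rather than the tool's method, and `count_dropped_frames` is a hypothetical helper.

```python
import math


def count_dropped_frames(frametimes_ms, refresh_hz=90.0):
    """Estimate dropped frames from per-frame render times in milliseconds."""
    interval_ms = 1000.0 / refresh_hz
    dropped = 0
    for frametime in frametimes_ms:
        # Refresh intervals consumed by this frame (at least one).
        slots = max(1, math.ceil(frametime / interval_ms))
        # Every interval beyond the first is a refresh with no new frame.
        dropped += slots - 1
    return dropped


sample = [10.0, 11.0, 15.0, 25.0, 9.5]
print(count_dropped_frames(sample))  # 3
```

In the sample, the 15.0 ms frame misses one refresh and the 25.0 ms frame misses two, giving three drops in total.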